Apache Spark is becoming very popular among organizations looking to leverage its fast, in-memory computing capability for big-data processing. This article helps beginners get started with setting up Spark in Eclipse/Scala IDE and become familiar with Spark terminology in general.



I hope you have read the previous article on RDD basics, which gives a basic understanding of Spark RDDs.

Tools Used:

- Scala IDE for Eclipse – Download the latest version of Scala IDE from here. Here, I used the Scala IDE 4.7.0 release, which supports both Scala and Java

- Scala Version – 2.11 (make sure the Scala compiler is set to this version as well)

- Spark Version – 2.2 (provided as a Maven dependency)

- Java Version – 1.8

- Maven Version – 3.3.9 (embedded in Eclipse)

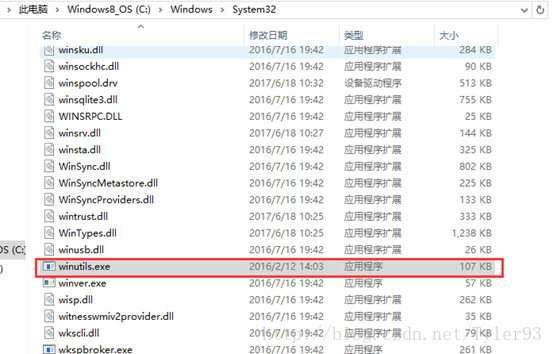

- winutils.exe

To run in a Windows environment, you need Hadoop's Windows helper binaries. winutils provides them, and we need to set the hadoop.home.dir system property to the folder whose bin subfolder contains winutils.exe (not to the bin folder itself). You can download winutils.exe here and place it at a path like c:/hadoop/bin/winutils.exe, in which case hadoop.home.dir is c:/hadoop. Read this for more information.
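
As a quick sanity check before creating the Spark context, you could verify that winutils.exe sits where Hadoop looks for it, which is the bin folder under hadoop.home.dir. The following is a minimal sketch using only the JDK. The class and method names (HadoopHomeSetup, configure, winutilsPath) are hypothetical helpers, not part of Hadoop or Spark, and c:/hadoop is just the example location used in this article.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical helper (not part of Hadoop or Spark): points hadoop.home.dir at the
// folder that CONTAINS bin\winutils.exe and reports whether the binary is present.
public class HadoopHomeSetup {

    /** Returns the location where winutils.exe is expected for a given Hadoop home. */
    public static Path winutilsPath(Path hadoopHome) {
        return hadoopHome.resolve("bin").resolve("winutils.exe");
    }

    /**
     * Sets hadoop.home.dir to hadoopHome (the parent of bin, not bin itself)
     * and returns true only if bin/winutils.exe exists as a regular file under it.
     */
    public static boolean configure(Path hadoopHome) {
        System.setProperty("hadoop.home.dir", hadoopHome.toAbsolutePath().toString());
        return Files.isRegularFile(winutilsPath(hadoopHome));
    }

    public static void main(String[] args) {
        // Example location from this article; pass another folder as the first argument.
        Path home = Paths.get(args.length > 0 ? args[0] : "c:/hadoop");
        if (configure(home)) {
            System.out.println("hadoop.home.dir = " + System.getProperty("hadoop.home.dir"));
        } else {
            System.err.println("winutils.exe not found at " + winutilsPath(home));
        }
    }
}
```

You could call HadoopHomeSetup.configure(Paths.get("c:/hadoop")) at the top of main, before the Spark context is created, instead of the bare System.setProperty call, and fail fast if it returns false.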

Creating a Sample Application in Eclipse –

In Scala IDE, create a new Maven Project –

Replace POM.XML as below –

POM.XML

To create a Java WordCount program, create a new Java class and copy the code below –

Java Code for WordCount


    import java.util.Arrays;

    import org.apache.spark.SparkConf;
    import org.apache.spark.api.java.JavaPairRDD;
    import org.apache.spark.api.java.JavaRDD;
    import org.apache.spark.api.java.JavaSparkContext;

    import scala.Tuple2;

    public class JavaWordCount {

        public static void main(String[] args) throws Exception {

            String inputFile = "src/main/resources/input.txt";

            // Point hadoop.home.dir at the folder that contains bin\winutils.exe (needed on Windows).
            System.setProperty("hadoop.home.dir", "c:/hadoop");

            // Initialize the Spark context: run locally with 4 worker threads.
            JavaSparkContext sc = new JavaSparkContext(
                    new SparkConf().setAppName("wordCount").setMaster("local[4]"));

            // Load data from the input file, one RDD element per line.
            JavaRDD<String> input = sc.textFile(inputFile);

            // Split lines into words, pair each word with 1, and sum the counts per word.
            JavaPairRDD<String, Integer> counts = input
                    .flatMap(line -> Arrays.asList(line.split(" ")).iterator())
                    .mapToPair(word -> new Tuple2<>(word, 1))
                    .reduceByKey((a, b) -> a + b);

            // collect() brings all results to the driver, which is fine for small inputs.
            System.out.println(counts.collect());

            // Stop the context and release its resources (close() would do the same).
            sc.stop();
        }
    }
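
To see what this pipeline computes without starting Spark, here is a plain-Java sketch using java.util.stream. It is only an illustration of the semantics, not how Spark executes the job, and the class name PlainWordCount is hypothetical. flatMap splits each line into words, and a toMap collector that merges with Integer::sum plays the role of mapToPair followed by reduceByKey.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.Function;
import java.util.stream.Collectors;

// Plain-Java (no Spark) sketch of the word count logic; PlainWordCount is a hypothetical name.
public class PlainWordCount {

    public static Map<String, Integer> count(List<String> lines) {
        return lines.stream()
                // Like flatMap: one line becomes many words, split on a single space.
                .flatMap(line -> Arrays.stream(line.split(" ")))
                // Like mapToPair + reduceByKey: key by word, start at 1, sum duplicates.
                // A TreeMap keeps the output sorted so it is easy to read.
                .collect(Collectors.toMap(Function.identity(), word -> 1, Integer::sum, TreeMap::new));
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("to be or", "not to be");
        System.out.println(count(lines)); // {be=2, not=1, or=1, to=2}
    }
}
```

Like the Spark version, splitting on a single space yields an empty-string "word" wherever two spaces appear in a row; splitting on "\\s+" instead would avoid that.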

Scala Version

To run the Scala version of the WordCount program, create a new Scala Object and use the code below –

You may need to set the project as a Scala project to run this. Also make sure the Scala compiler version set in the build path matches the Scala version of your Spark dependency (2.11 here) –

    import org.apache.spark.SparkConf
    import org.apache.spark.SparkContext

    object ScalaWordCount {

      def main(args: Array[String]): Unit = {

        // Point hadoop.home.dir at the folder that contains bin\winutils.exe (needed on Windows).
        System.setProperty("hadoop.home.dir", "c:/hadoop")

        // Create the Spark context with a local master using 4 worker threads.
        val sc = new SparkContext(new SparkConf().setAppName("Spark WordCount").setMaster("local[4]"))

        // Load the input file, one RDD element per line.
        val inputFile = sc.textFile("src/main/resources/input.txt")

        // Split lines into words, pair each word with 1, and sum the counts per word.
        val counts = inputFile.flatMap(line => line.split(" ")).map(word => (word, 1)).reduceByKey((a, b) => a + b)

        // In local mode this prints to the console; on a cluster, foreach runs on the executors.
        counts.foreach(println)

        sc.stop()
      }
    }
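
Spark documents that reduceByKey merges values "using an associative and commutative reduce function" and also merges locally on each partition before shuffling, much like a MapReduce combiner. That is why (a, b) => a + b is a safe choice. The plain-Java sketch below simulates that two-step behaviour without Spark. The class, its methods, and the way words are split into contiguous partitions are hypothetical illustration choices, not Spark's actual partitioning.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical illustration (not Spark code) of reduceByKey's two steps:
// combine counts inside each partition, then merge partial results per key.
public class ReduceByKeySketch {

    // Step 1: combine (word, 1) pairs within a single partition.
    static Map<String, Integer> combineLocally(List<String> words) {
        Map<String, Integer> partial = new HashMap<>();
        for (String w : words) {
            partial.merge(w, 1, Integer::sum);
        }
        return partial;
    }

    // Step 2: merge per-partition partial counts using the same (a, b) -> a + b function.
    static Map<String, Integer> mergePartials(List<Map<String, Integer>> partials) {
        Map<String, Integer> result = new TreeMap<>();
        for (Map<String, Integer> p : partials) {
            p.forEach((w, c) -> result.merge(w, c, Integer::sum));
        }
        return result;
    }

    // Splits the words into at most numPartitions contiguous chunks, then applies both steps.
    public static Map<String, Integer> countWithPartitions(List<String> words, int numPartitions) {
        if (numPartitions < 1) {
            throw new IllegalArgumentException("numPartitions must be at least 1");
        }
        List<Map<String, Integer>> partials = new ArrayList<>();
        int size = (words.size() + numPartitions - 1) / numPartitions; // ceiling division
        for (int start = 0; start < words.size(); start += size) {
            int end = Math.min(start + size, words.size());
            partials.add(combineLocally(words.subList(start, end)));
        }
        return mergePartials(partials);
    }

    public static void main(String[] args) {
        List<String> words = Arrays.asList("to", "be", "or", "not", "to", "be");
        // Same answer regardless of how the words are partitioned.
        System.out.println(countWithPartitions(words, 2)); // {be=2, not=1, or=1, to=2}
    }
}
```

Because addition is associative and commutative, the result does not depend on how the data is split. A function such as (a, b) => a - b would give partition-dependent answers.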

So, your final setup will look like this –

Running the code in Eclipse

You can run either version in Eclipse with Run As > Scala Application or Run As > Java Application to see the output.


Output

Now you should see the word count output, along with log lines generated by Spark's default log4j properties.

In the next post, I will explain how you can open the Spark Web UI and look at the various stages and tasks Spark runs internally when executing your code.

You may also be interested in some other BigData posts –

- Spark: How to Run Spark Applications on Windows